The AI genie is out of the bottle in Hollywood.

From scriptwriting assistants to synthetic performers, from AI-generated backgrounds to deepfake de-aging, artificial intelligence has moved from R&D curiosity to everyday production tool in less than three years. But here’s what keeps entertainment lawyers up at night: most production companies are flying blind.

They’re using tools they don’t fully understand, under terms of service they haven’t read, creating assets with uncertain copyright status, and leaving themselves exposed to litigation from performers, guilds, and rights holders who are increasingly organized and litigious.

The studios that thrive in this environment won’t be the ones who avoid AI—that ship has sailed. They’ll be the ones with airtight internal policies that let them harness AI’s capabilities while maintaining defensible legal positions.

We’ve identified the twelve non-negotiable rules that every AI filmmaking policy must address. This article outlines what those rules cover and why they matter.

The Stakes: Why “We’ll Figure It Out Later” Is No Longer an Option

Consider what’s happened in the past eighteen months alone:

The Copyright Office drew a line. The Thaler decisions and subsequent guidance made clear that content generated entirely by AI, with no human author, isn’t copyrightable in the United States. If your AI policy doesn’t ensure human authorship, your deliverables may have no IP protection at all.

The unions got specific. SAG-AFTRA’s 2023 contract introduced detailed provisions on digital replicas and synthetic performers. Productions that created AI likenesses without proper consent frameworks are now scrambling to remediate.

Training data lawsuits multiplied. Getty, artists’ collectives, and authors are aggressively litigating against AI companies—and the downstream users of those tools may not be indemnified.

The window for “move fast and figure out compliance later” has closed. The question isn’t whether your company needs an AI policy. It’s whether your policy is comprehensive enough to actually protect you.

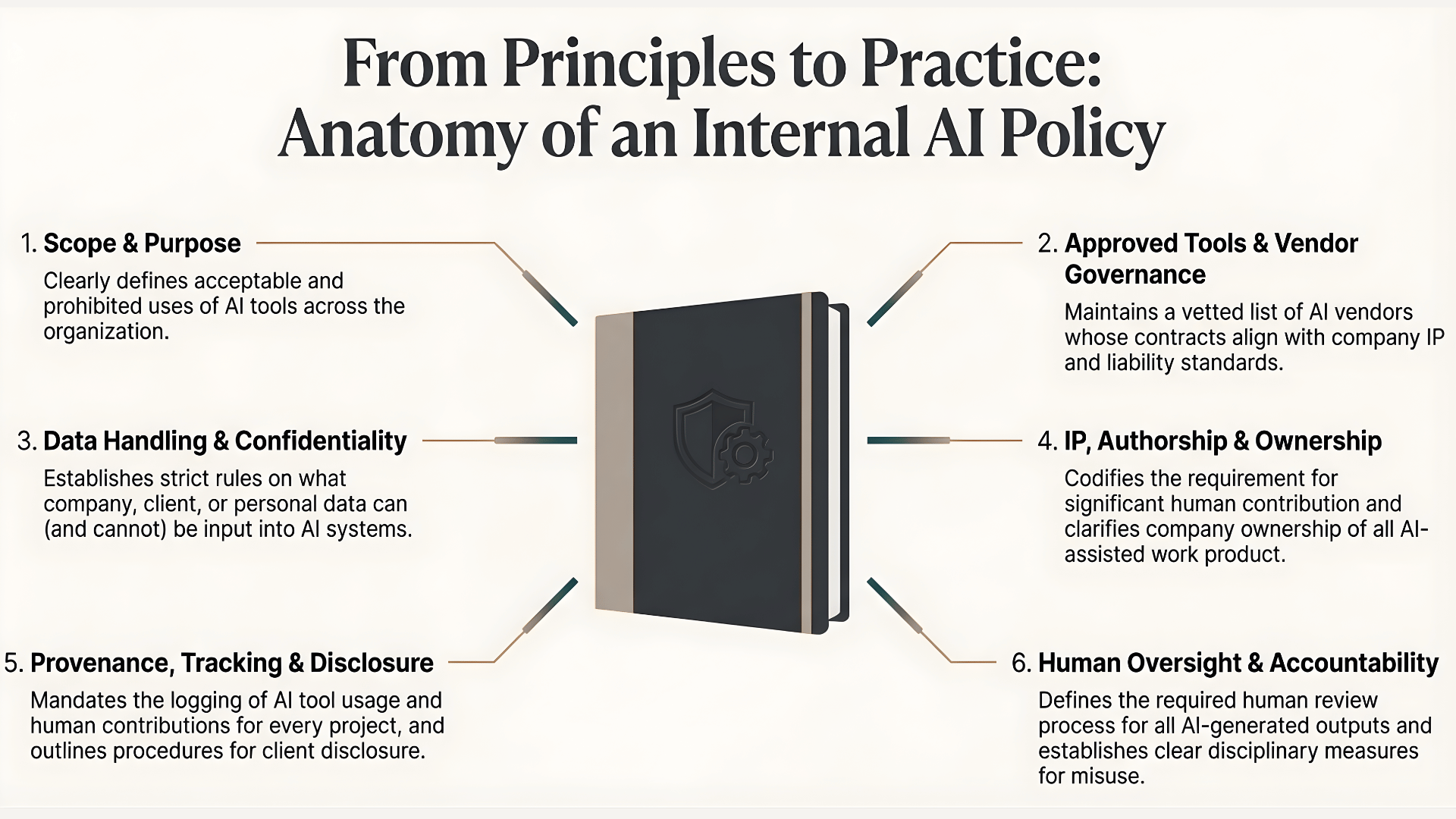

The 12 Rules: A Framework for Defensible AI Governance

Rule 1: Preserve Human Authorship

The problem: Content generated by AI without human authorship isn’t copyrightable. If your final deliverables are substantially AI-created, you may have no enforceable IP rights and no ability to prevent competitors from copying your work.

What your policy needs: Clear requirements that human creators exercise “substantial creative control” over all deliverables, with documentation standards that can prove it in court if challenged.

The nuance most policies miss: It’s not enough to have a human press “generate.” Your policy must define what constitutes meaningful human transformation and require contemporaneous documentation of creative contributions.

Rule 2: Implement Tiered Disclosure Requirements

The problem: Disclosure obligations vary dramatically based on context—from internal brainstorming (minimal requirements) to public-facing synthetic media (strict platform and regulatory mandates).

What your policy needs: A clear matrix specifying what must be disclosed, to whom, and in what format for each category of AI use.

The nuance most policies miss: Machine-readable metadata requirements. Platforms like YouTube and Meta now require specific labeling for synthetic content. A policy that only addresses human-readable disclosures is already outdated.
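To make the idea of a disclosure matrix concrete, here is a minimal sketch in Python. The category names, audiences, and requirements are hypothetical placeholders, not legal guidance or any platform's actual rules; a real matrix would be populated with values approved by counsel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DisclosureRule:
    audience: str            # who must be told
    human_readable: bool     # visible label or credit required
    machine_readable: bool   # embedded metadata required


# Hypothetical categories and requirements, for illustration only.
DISCLOSURE_MATRIX = {
    "internal_brainstorming": DisclosureRule("project lead", False, False),
    "client_deliverable": DisclosureRule("client", True, False),
    "public_synthetic_media": DisclosureRule("platform and audience", True, True),
}


def required_disclosure(category: str) -> DisclosureRule:
    """Look up the disclosure rule; refuse to guess for unclassified uses."""
    try:
        return DISCLOSURE_MATRIX[category]
    except KeyError:
        raise ValueError(
            f"Unclassified AI use {category!r}: classify it before release"
        ) from None
```

The one design choice worth noting is that an unknown category raises an error instead of falling back to a default. A use that nobody has classified should stop and get classified, not ship under the lightest rule.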

Rule 3: Lock Down Performer Consent

The problem: Digital replicas and voice clones created without proper consent are litigation time bombs. And “proper consent” is far more complex than a standard release form.

What your policy needs: Consent frameworks that address living performers, deceased performers (estate authorization), compensation structures, use-case specificity, and—critically—retirement and destruction provisions for when licenses expire.

The nuance most policies miss: Union overlay. For signatory productions, your consent protocols must satisfy collective bargaining requirements, which may exceed your standard contractual protections.

Rule 4: Verify Training Data Provenance

The problem: If your AI vendor trained on unlicensed content, you may be liable for infringement—even if you had no knowledge of the training data composition.

What your policy needs: Mandatory vendor certifications regarding training data, a prohibition on using production assets for model training without authorization, and a register of all AI tools deployed by project.

The nuance most policies miss: Terms of service review. Many AI vendors’ ToS include clauses allowing them to use your inputs to improve their models. Your unreleased script could become training data for a competitor’s project.

Rule 5: Mandate Secured Processing Environments

The problem: An employee pasting a confidential script into ChatGPT isn’t just a policy violation—it’s a potential trade secret waiver that could torpedo an entire production.

What your policy needs: Explicit prohibitions on using public AI tools for confidential work, requirements for enterprise-secured environments with data deletion guarantees, and special protocols for high-sensitivity projects.

The nuance most policies miss: Personal device policies. Your enterprise security means nothing if contractors are running confidential materials through consumer AI tools on their phones.

Rule 6: Require Structured Human Review

The problem: AI systems hallucinate, exhibit bias, and produce inaccuracies. Releasing AI-assisted content without proper review exposes you to defamation claims, factual challenges, and reputational damage.

What your policy needs: Review requirements calibrated by content type—with heightened scrutiny for documentary and factual content where AI-generated imagery could mislead audiences about real events.

The nuance most policies miss: Bias screening. AI tools can embed demographic biases that create PR and legal exposure. Your review protocols need to specifically address this.

Rule 7: Build a Provenance System That Will Hold Up in Court

This is where most policies fail catastrophically.

The problem: When a performer claims their likeness was used without consent, when a copyright holder alleges infringement, when a regulator demands documentation—you need an unbroken chain of custody proving exactly what AI tools were used, what inputs were provided, what human modifications were made, and who authorized each step.

What your policy needs:

- Asset-level documentation capturing the AI tool, version, inputs, transformation history, and authorization chain for every AI-assisted deliverable

- Technical provenance standards including C2PA Content Credentials, cryptographic hashing, and metadata integrity requirements

- Retention policies ensuring records survive for the duration of copyright protection

- Audit protocols enabling you to demonstrate compliance to regulators, courts, and clients

The nuance most policies miss: Almost everything. Most “AI policies” we review have a single paragraph on documentation. That’s not a provenance system—it’s a liability.

A defensible provenance framework is the difference between being able to prove your compliance and hoping no one asks.
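As a rough illustration of asset-level documentation backed by cryptographic hashing, the sketch below builds a tamper-evident chain of records using only Python's standard library. The field names, the `make_record` and `verify_chain` helpers, and the `GENESIS` marker are all assumptions made for this example. It is not an implementation of C2PA Content Credentials, which is a separate standard with its own tooling.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # hypothetical marker for the first record in a chain


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def _canonical(body: dict) -> bytes:
    # Sorted keys give a stable byte representation to hash.
    return json.dumps(body, sort_keys=True).encode("utf-8")


def make_record(asset_bytes, tool, version, prompt, human_edits,
                approved_by, prev_hash, timestamp=None):
    """Create one provenance record linked to the previous record's hash."""
    body = {
        "asset_sha256": sha256_hex(asset_bytes),
        "tool": tool,
        "tool_version": version,
        "input_sha256": sha256_hex(prompt.encode("utf-8")),
        "human_edits": human_edits,
        "approved_by": approved_by,
        "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
        "prev_hash": prev_hash,
    }
    return {**body, "record_hash": sha256_hex(_canonical(body))}


def verify_chain(records) -> bool:
    """Return True only if every record is unaltered and correctly linked."""
    prev = GENESIS
    for rec in records:
        body = {k: v for k, v in rec.items() if k != "record_hash"}
        if rec["prev_hash"] != prev:
            return False
        if rec["record_hash"] != sha256_hex(_canonical(body)):
            return False
        prev = rec["record_hash"]
    return True
```

Because each record includes the hash of the one before it, editing any field after the fact breaks verification for that record and everything downstream. That property is what turns a log into something closer to a chain of custody.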

Rule 8: Assess Vendors Before You’re Locked In

The problem: By the time you discover your AI vendor’s training data is legally compromised or their indemnification is worthless, you’ve already built a production around their tools.

What your policy needs: A structured pre-deployment assessment covering data security, IP risk, regulatory compliance, operational stability, and ethical safeguards—with minimum contractual requirements that must be satisfied before any tool is approved.

The nuance most policies miss: Indemnification specificity. Generic indemnification clauses often exclude the exact scenarios you need coverage for. Your assessment framework should include a term-by-term evaluation of vendor liability provisions.

Rule 9: Map Vendor Terms Against Client Obligations

The problem: Your AI vendor grants you a limited license to outputs. Your client contract requires full IP assignment. Congratulations—you just made a promise you can’t keep.

What your policy needs: A compliance mapping process that reconciles vendor terms with client obligations before tools are deployed on specific projects.

The nuance most policies miss: This entire rule. It’s remarkable how many production companies treat vendor management and client delivery as separate silos—until a conflict explodes.
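At its simplest, compliance mapping is a comparison between what the client contract requires and what the vendor actually grants. The sketch below assumes both have been reduced to sets of hypothetical term labels such as `full_ip_assignment`. Real contracts need legal review, and no label set captures every nuance.

```python
def find_conflicts(vendor_grants: set, client_requires: set) -> list:
    """Return client requirements the vendor's terms do not cover."""
    return sorted(client_requires - vendor_grants)


# Hypothetical term labels, for illustration only.
vendor_grants = {"limited_output_license", "commercial_use"}
client_requires = {"full_ip_assignment", "commercial_use"}

conflicts = find_conflicts(vendor_grants, client_requires)
if conflicts:
    print("Do not deploy on this project until resolved:", conflicts)
```

Running this check per project, before the tool is deployed, is the point. The same vendor can be fine for one client and a breach of contract for another.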

Rule 10: Classify Use Cases by Risk

The problem: Applying the same approval requirements to a brainstorming session and a synthetic performer resurrection is either going to grind production to a halt or leave critical decisions unsupervised.

What your policy needs: A risk classification matrix with clear criteria, specific examples, and escalating approval authorities—from department-level sign-off for low-risk uses to C-suite and performer approval for synthetic replicas.

The nuance most policies miss: Escalation triggers. What happens when a project’s scope changes and a Level 2 use case becomes Level 4? Your policy needs reclassification protocols with real-time escalation requirements.
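A reclassification check can be sketched in a few lines. The four levels and the approver lists below are assumptions for illustration; the article specifies only that approvals escalate from department-level sign-off to C-suite and performer approval.

```python
from enum import IntEnum


class RiskLevel(IntEnum):
    LEVEL_1 = 1  # e.g. internal brainstorming
    LEVEL_2 = 2
    LEVEL_3 = 3
    LEVEL_4 = 4  # e.g. synthetic performer replica


# Hypothetical approver lists; each level includes the ones below it.
APPROVERS = {
    RiskLevel.LEVEL_1: ["department head"],
    RiskLevel.LEVEL_2: ["department head", "legal"],
    RiskLevel.LEVEL_3: ["department head", "legal", "executive producer"],
    RiskLevel.LEVEL_4: ["department head", "legal", "executive producer",
                        "C-suite", "performer"],
}


def escalation_needed(approved: RiskLevel, new: RiskLevel) -> list:
    """List the extra sign-offs required when a use case moves up in risk."""
    if new <= approved:
        return []
    already = set(APPROVERS[approved])
    return [a for a in APPROVERS[new] if a not in already]
```

For the scenario above, `escalation_needed(RiskLevel.LEVEL_2, RiskLevel.LEVEL_4)` returns the executive producer, C-suite, and performer sign-offs that were not part of the original approval. Work on the expanded scope would pause until each one is recorded.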

Rule 11: Plan for When Things Go Wrong

The problem: Even with perfect policies, incidents happen—unauthorized tool deployments, data breaches, consent disputes, regulatory inquiries. The question is whether you have a response framework or you’re improvising under pressure.

What your policy needs: Defined incident categories, response timelines, notification chains, asset quarantine procedures, and root cause analysis requirements.

The nuance most policies miss: This entire rule. Most AI policies we review have zero incident response provisions. They’re compliance frameworks with no plan for what happens when a rule is broken.

Rule 12: Train Your People (And Prove It)

The problem: A policy that exists only in a shared drive folder isn’t a policy—it’s a liability shield with holes in it.

What your policy needs: Mandatory annual training for all personnel authorized to use AI tools, certification tracking, and acknowledgment requirements for contractors and vendors.

The nuance most policies miss: Specificity. “Training on AI policy” isn’t enough. Your program needs to cover the exact scenarios your people will face—consent workflows, provenance documentation, escalation triggers—with practical exercises, not just slide decks.

The Bottom Line: Policies Are Competitive Advantage

The production companies that will dominate the AI era aren’t the ones with the most advanced tools. They’re the ones who can use those tools confidently—knowing they have defensible positions on authorship, consent, provenance, and compliance.

A rigorous AI policy isn’t bureaucratic overhead. It’s what lets you say yes to ambitious AI-assisted projects while your competitors are still trying to figure out if they’re allowed.

How We Help

At AI Strategy Law, we build AI governance frameworks for production companies—from independent studios to major streamers. Our engagements typically include:

Policy Development: Custom AI filmmaking policies tailored to your production slate, client relationships, and risk tolerance, not templates adapted from other industries.

Provenance System Design: Technical and procedural frameworks for documentation, metadata standards, and audit trails that will hold up under legal scrutiny.

Vendor Assessment: Structured evaluations of AI tools and services, including contract negotiation to secure appropriate indemnification and data protection terms.

Training Programs: Role-specific training for creative, legal, and production teams, with practical scenarios and certification tracking.

Incident Response Planning: Playbooks for the scenarios you hope won’t happen but need to be prepared for.

Ready to Build Your AI Policy?

Most production companies we talk to know they need better AI governance. They just don’t know where to start.

Start with a conversation. We’ll assess where you are, identify your highest-risk gaps, and outline what a comprehensive policy would look like for your specific situation.
